"Design me a living room!"

Abstract

Interior design allows us to be who we are and live how we want: each design is as unique as our distinct personality. However, it is not trivial for non-professionals to express and materialize this, since doing so requires aligning functional and visual expectations with the constraints of physical space; this renders interior design a luxury.

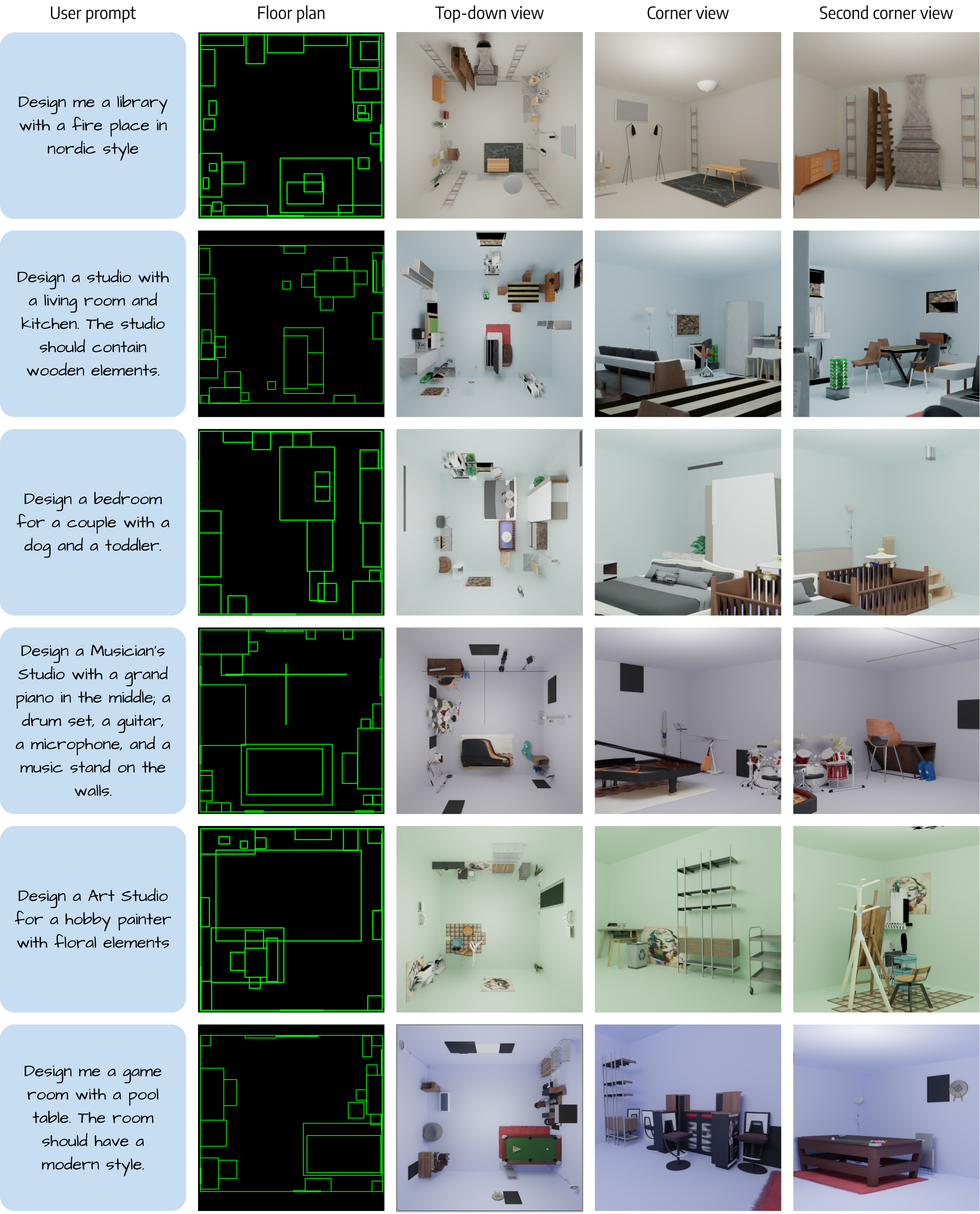

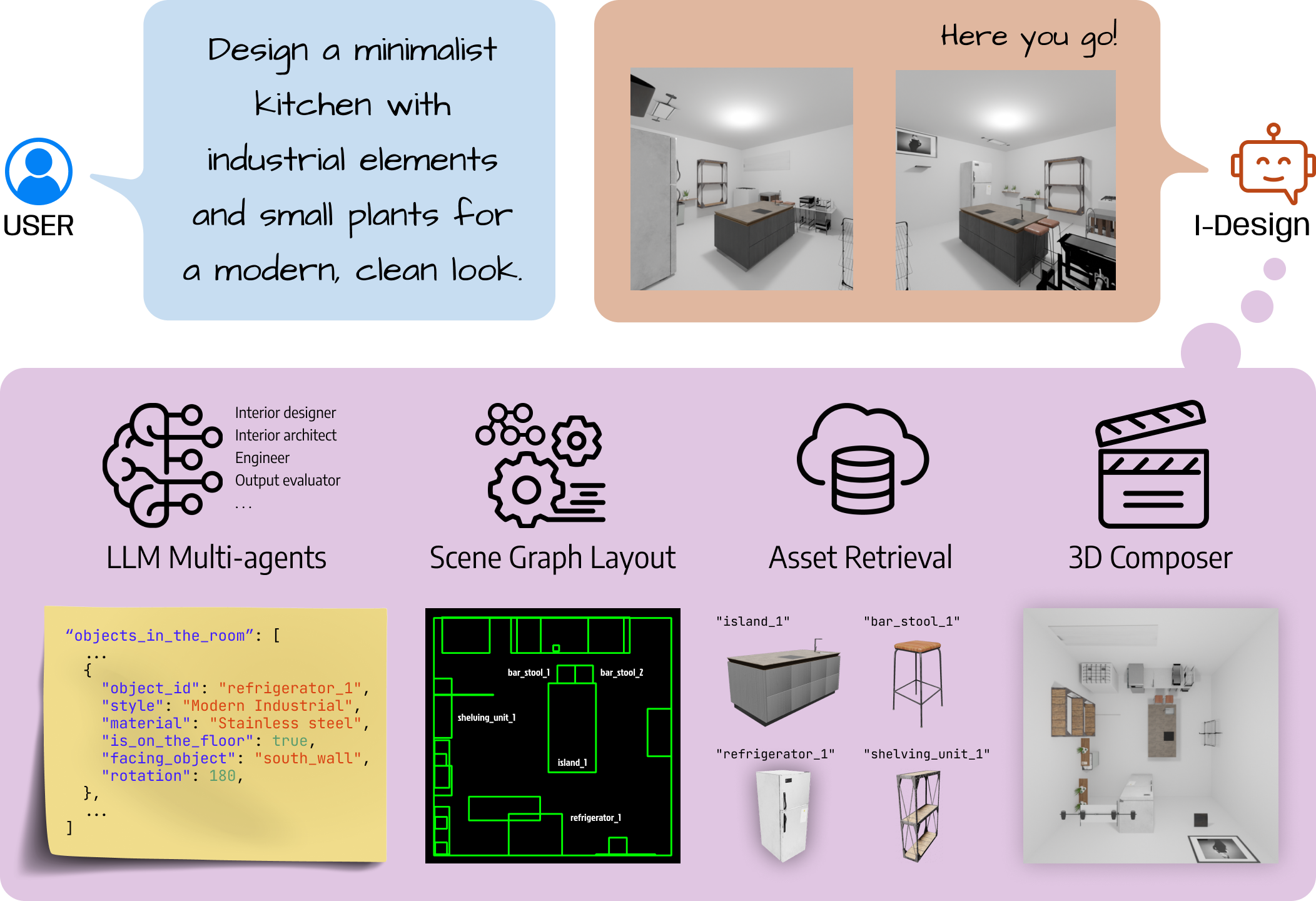

To make it more accessible, we present I-Design, a personalized interior designer that allows users to generate and visualize their design goals through natural language communication. I-Design starts with a team of large language model agents that engage in dialogues and logical reasoning with one another, transforming textual user input into feasible scene graph designs with relative object relationships. Subsequently, an effective placement algorithm determines optimal locations for each object within the scene. The final design is then constructed in 3D by retrieving and integrating assets from an existing object database. Additionally, we propose a new evaluation protocol that utilizes a vision-language model and complements the design pipeline.

Extensive quantitative and qualitative experiments show that I-Design outperforms existing methods in delivering high-quality 3D design solutions and aligning with abstract concepts that match user input, showcasing its advantages across both detailed 3D arrangement and conceptual fidelity.

Method

The I-Design system comprises two primary components: Scene Graph Generation and 3D Scene Construction. The Scene Graph Generation component converts the user's textual input into a scene graph representation through a dialogue among collaborating large language model agents. The 3D Scene Construction component then builds the final 3D scene from this scene graph by executing a Backtracking Algorithm on it. Finally, 3D assets are retrieved from an existing object database using multi-modal embeddings and integrated into the 3D scene.
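To make the embedding-based retrieval step concrete, here is a minimal sketch of nearest-neighbour lookup by cosine similarity. It assumes that the text query and every asset have already been embedded into a shared multi-modal space; the page does not say which embedding model or similarity measure I-Design uses, so cosine similarity is an assumption, and the helper `retrieve_asset`, the asset IDs, and the toy 3-D vectors are all hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_asset(
    query_embedding: Sequence[float],
    asset_embeddings: Dict[str, Sequence[float]],
    top_k: int = 1,
) -> List[Tuple[str, float]]:
    """Hypothetical helper: rank assets by cosine similarity to the query embedding."""
    scored = [
        (asset_id, cosine_similarity(query_embedding, emb))
        for asset_id, emb in asset_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy example: made-up 3-D vectors stand in for real multi-modal embeddings.
assets = {
    "sofa_modern_grey": [0.9, 0.1, 0.0],
    "oak_dining_table": [0.1, 0.9, 0.2],
    "floor_lamp_brass": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # pretend embedding of "a modern grey sofa"
print(retrieve_asset(query, assets, top_k=2))
```

In practice the asset embeddings would be precomputed once for the whole database, so each query costs only one embedding plus a similarity search.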

The LLM agents analyze the user's input and generate a scene graph representation that captures the objects in the scene and the positional relationships between them. The agents produce the scene graph by communicating with one another, with each agent responsible for a specific part of the generation pipeline: identifying the objects to include in the scene, determining the positional relationships between them, and outputting the scene graph in a predefined JSON schema.
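The page does not reproduce the predefined JSON schema, so the sketch below assumes a hypothetical one: each object carries an ID, a name, a material, a style, and a list of relations of the form (relation kind, target object). The field names and the relation vocabulary ("in front of", "next to") are illustrative only. The check in `to_json` shows one reason a fixed schema is useful: every relation must point at an object that actually exists in the graph.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Relation:
    kind: str    # hypothetical relation vocabulary, e.g. "in front of", "left of"
    target: str  # id of the reference object


@dataclass
class SceneObject:
    id: str
    name: str
    material: str
    style: str
    relations: List[Relation] = field(default_factory=list)


def to_json(objects: List[SceneObject]) -> str:
    """Serialize the scene graph after checking that every relation target exists."""
    ids = {obj.id for obj in objects}
    for obj in objects:
        for rel in obj.relations:
            if rel.target not in ids:
                raise ValueError(f"{obj.id} refers to unknown object {rel.target}")
    # asdict recursively converts nested dataclasses (including lists of them) to dicts.
    return json.dumps({"objects": [asdict(o) for o in objects]}, indent=2)


scene = [
    SceneObject("sofa_1", "sofa", "fabric", "Scandinavian"),
    SceneObject("table_1", "coffee table", "oak", "Scandinavian",
                [Relation("in front of", "sofa_1")]),
]
print(to_json(scene))
```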

The Backtracking Algorithm converts the relative object representations in the scene graph into absolute 3D coordinates. It does so by iteratively placing objects in the scene so that they do not collide with one another and do not extend out of bounds. The relative positions of the objects define a bounding box of plausible positions for each object. The algorithm iterates through the objects in the scene graph and places each one at a position selected within its bounding box. If an object cannot be placed, the algorithm backtracks and tries a different position. This process continues until all objects are placed or the scene is determined to be infeasible. The final 3D scene is then constructed by retrieving 3D assets from the Objaverse database, querying it with the object names, materials, and architectural style information from the scene graph. The retrieved assets are then placed into a Blender scene and rendered.
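The placement search described above can be sketched as a textbook recursive backtracking search. This is a simplified 2D illustration, not I-Design's implementation, and it rests on several assumptions: footprints are axis-aligned rectangles on an integer grid, rotation and height are ignored, objects are placed in list order, and each object's relations have already been turned into a rectangle of allowed lower-left corner positions (the hypothetical `region` field).

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in grid units


@dataclass
class Item:
    name: str
    width: int
    depth: int
    region: Rect  # allowed lower-left corners; assumed to be derived from the relations


def overlaps(a: Rect, b: Rect) -> bool:
    # Axis-aligned rectangles overlap if they intersect on both axes; shared edges are allowed.
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def place(items: List[Item], room: Tuple[int, int], step: int = 1) -> Optional[Dict[str, Rect]]:
    """Return a footprint per item, or None if no collision-free, in-bounds layout exists."""
    room_w, room_d = room
    placed: Dict[str, Rect] = {}

    def solve(i: int) -> bool:
        if i == len(items):
            return True  # every item has a valid position
        item = items[i]
        x_min, y_min, x_max, y_max = item.region
        for x in range(x_min, x_max + 1, step):
            for y in range(y_min, y_max + 1, step):
                rect = (x, y, x + item.width, y + item.depth)
                in_bounds = x >= 0 and y >= 0 and rect[2] <= room_w and rect[3] <= room_d
                if in_bounds and not any(overlaps(rect, r) for r in placed.values()):
                    placed[item.name] = rect
                    if solve(i + 1):
                        return True
                    del placed[item.name]  # backtrack: undo this choice, try the next one
        return False  # no position works, so the caller must revise an earlier item

    return dict(placed) if solve(0) else None


# Hypothetical example: a sofa along the back wall and a bookshelf against the left wall.
items = [
    Item("sofa", width=6, depth=2, region=(0, 0, 4, 0)),
    Item("bookshelf", width=4, depth=4, region=(0, 0, 2, 0)),
]
print(place(items, room=(10, 4)))
# {'sofa': (4, 0, 10, 2), 'bookshelf': (0, 0, 4, 4)}
```

In this example the first four sofa positions leave no room for the bookshelf, so the search backtracks until the sofa reaches x = 4. In a room only 8 units wide no valid layout exists, and `place` returns `None`, which corresponds to the infeasible case in the text.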

Experiments

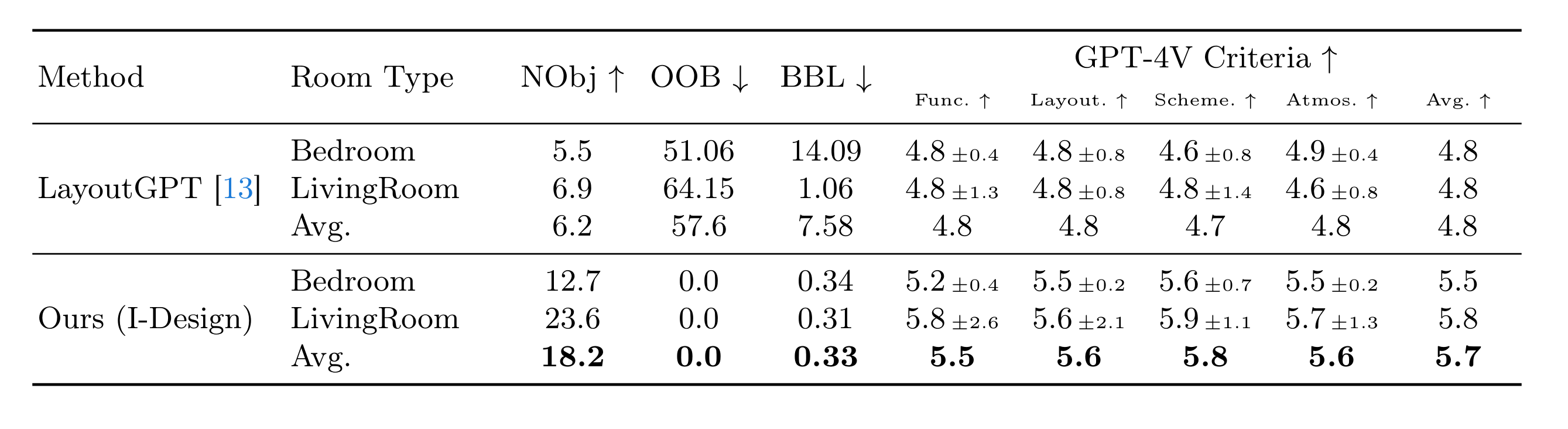

Comparison using the GPT-4V Evaluator

We compare I-Design with an existing text-to-3D method, LayoutGPT, using the GPT-4V evaluator. Previous work has shown that GPT-4V is effective at evaluating the quality of 3D scenes generated from textual descriptions, so we use the same evaluator to compare the 3D scenes generated by I-Design and LayoutGPT. As LayoutGPT does not accommodate user input, we use generic prompts for our method to ensure a fair comparison: "Design me a living room!" and "Design me a bedroom!". The results show that I-Design outperforms LayoutGPT on all grading metrics.

Furthermore, the Backtracking Algorithm used to place the objects ensures that they are collision-free and prevents them from being placed out of bounds. This leads to scenes that are more physically plausible and visually appealing.

Citation

@misc{çelen2024idesign,
  title={I-Design: Personalized LLM Interior Designer},
  author={Ata Çelen and Guo Han and Konrad Schindler and Luc Van Gool and Iro Armeni and Anton Obukhov and Xi Wang},
  year={2024},
  eprint={2404.02838},
  archivePrefix={arXiv},
  primaryClass={cs.AI}
}